Supercharge Your Python with Caching: A Comprehensive Guide

Imagine dramatically speeding up your Python programs without significant code changes. That's the power of caching! Caching in Python acts like a memory for your program, storing the results of complex calculations so it doesn't have to repeat them. This leads to faster execution and improved efficiency, especially for computationally intensive tasks.

This article explores Python caching techniques, showing you how to leverage this powerful tool for smoother, faster applications.

Key Concepts:

- Grasp the core principles and advantages of Python caching.

- Master the functools.lru_cache decorator for straightforward caching.

- Build custom caching solutions using dictionaries and libraries like cachetools.

- Optimize database queries and API calls with caching for enhanced performance.

Table of Contents:

- Introduction

- Understanding Caching

- When to Employ Caching

- Implementing Caching in Python

- Advanced Caching Techniques

- Real-World Applications

- Summary

- Frequently Asked Questions

What is Caching?

Caching involves saving the output of time-consuming or repetitive operations. Subsequent requests with identical parameters can then retrieve the stored result, avoiding redundant calculations. This significantly reduces processing time, particularly for computationally expensive functions or those called repeatedly with the same inputs.

When to Use Caching?

Caching shines in these situations:

- Functions with high computational costs.

- Functions frequently called with the same arguments.

- Functions that are deterministic: the same inputs always produce the same result, so a stored result never goes stale.
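One way to check whether a function fits these criteria is to count how often its body actually runs compared with how often it is called. The sketch below is illustrative only: `slow_square` and the `call_count` counter are hypothetical names, not part of any library.

```python
from functools import lru_cache

call_count = 0  # counts how many times the function body really executes


@lru_cache(maxsize=None)
def slow_square(x):
    """Hypothetical stand-in for an expensive, deterministic function."""
    global call_count
    call_count += 1
    return x * x


# Ten calls, but only three distinct arguments.
results = [slow_square(n) for n in [2, 3, 2, 4, 3, 2, 2, 4, 3, 2]]

print(call_count)                # the body ran once per distinct argument
print(slow_square.cache_info())  # reports hits, misses, maxsize, currsize
```

If the number of hits reported by `cache_info()` stays near zero in a real workload, the arguments rarely repeat and caching is unlikely to help.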

Implementing Caching with Python

Python's functools module provides the lru_cache (Least Recently Used cache) decorator. It's simple to use and highly effective:

Using functools.lru_cache

- Import the Decorator:

from functools import lru_cache

- Apply the Decorator:

Decorate your function to enable caching:

@lru_cache(maxsize=128)
def expensive_calculation(x):
    # Simulate a complex calculation
    result = x * x * x  # Example: cubing the input
    return result

maxsize limits the cache size. Reaching this limit triggers removal of the least recently used entry. Setting maxsize=None creates an unbounded cache.
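The eviction behaviour described above can be observed with a deliberately tiny cache. This is a minimal sketch; the `cube` function and the `maxsize=2` setting are chosen only to make eviction visible.

```python
from functools import lru_cache


@lru_cache(maxsize=2)
def cube(x):
    return x * x * x


cube(1)  # miss: the cache now holds 1
cube(2)  # miss: the cache now holds 1 and 2
cube(1)  # hit: 1 becomes the most recently used entry
cube(3)  # miss: the cache is full, so 2 (least recently used) is evicted
cube(2)  # miss again, because 2 was evicted; this time 1 is evicted

info = cube.cache_info()
print(info)  # named tuple with hits, misses, maxsize, currsize
```

Note that lru_cache builds its keys from the call arguments, so every argument must be hashable. Passing a list, for example, raises a TypeError.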

Example:

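import time
from functools import lru_cache

@lru_cache(maxsize=None)
def fibonacci(n):
    # Base cases: fibonacci(0) = 0 and fibonacci(1) = 1
    if n < 2:
        return n
    # Standard recursive definition; each value is computed once, then cached
    return fibonacci(n - 1) + fibonacci(n - 2)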

Custom Caching Solutions

For more intricate caching needs, consider custom solutions:

Using Dictionaries:

my_cache = {}

def my_expensive_function(x):
    if x not in my_cache:
        my_cache[x] = x * x * x  # Example: cubing the input
    return my_cache[x]
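The dictionary approach can be wrapped in a reusable decorator so that the cache does not have to be written into each function. The sketch below is one possible way to do it; `memoize` and `power` are hypothetical names introduced for illustration.

```python
from functools import wraps


def memoize(func):
    """Cache results of func in a plain dict keyed by its arguments."""
    cache = {}

    @wraps(func)
    def wrapper(*args, **kwargs):
        # Build a hashable key from positional and keyword arguments.
        key = (args, tuple(sorted(kwargs.items())))
        if key not in cache:
            cache[key] = func(*args, **kwargs)
        return cache[key]

    wrapper.cache = cache  # exposed so callers can inspect or clear it
    return wrapper


@memoize
def power(base, exponent=3):
    return base ** exponent


power(2)              # computed and stored
power(2)              # served from the dict
power(2, exponent=5)  # different key, so computed separately
print(len(power.cache))
```

Two caveats with this sketch: the dictionary grows without bound, and `power(2)` and `power(2, exponent=3)` are stored under different keys even though they compute the same value.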

Using cachetools:

The cachetools library offers diverse cache types and greater flexibility than lru_cache.

from cachetools import cached, LRUCache

cache = LRUCache(maxsize=128)

@cached(cache)
def expensive_function(x):
    return x * x * x  # Example: cubing the input
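One of the cache types cachetools offers is time-based expiry (its TTLCache class). To show the idea without a third-party dependency, the sketch below uses only the standard library. It is not the cachetools implementation: `TTLCacheSketch`, its `get` method, and the injectable `clock` parameter are all hypothetical.

```python
import time


class TTLCacheSketch:
    """Hypothetical minimal cache whose entries expire after ttl seconds."""

    def __init__(self, ttl, clock=time.monotonic):
        self.ttl = ttl
        self.clock = clock  # injectable so expiry can be demonstrated
        self._store = {}    # key -> (expires_at, value)

    def get(self, key, compute):
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # still fresh: reuse the stored value
        value = compute(key)  # missing or expired: recompute
        self._store[key] = (now + self.ttl, value)
        return value


fake_now = [0.0]  # controllable clock for the demonstration
cache = TTLCacheSketch(ttl=10, clock=lambda: fake_now[0])
calls = []  # records every real computation


def cube(x):
    calls.append(x)
    return x * x * x


cache.get(2, cube)  # computed
cache.get(2, cube)  # reused, the entry is still fresh
fake_now[0] = 11.0  # advance the fake clock past the TTL
cache.get(2, cube)  # recomputed, the entry has expired
print(len(calls))
```

Time-based expiry suits data that changes occasionally, where a slightly stale value is acceptable for a bounded period.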

Practical Applications

- Database Queries: Cache query results to lessen database load and improve response times.

- API Calls: Cache API responses to avoid rate limits and reduce latency.
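As a rough illustration of the database case, the sketch below caches lookups against an in-memory SQLite database. The `users` table, the `get_user_name` function, and the `query_count` counter are assumptions made for the example. The same pattern would apply to a function that wraps an API call.

```python
import sqlite3
from functools import lru_cache

# In-memory database with a hypothetical users table, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("INSERT INTO users (id, name) VALUES (1, 'Ada')")
conn.commit()

query_count = 0  # how many times the database was actually queried


@lru_cache(maxsize=256)
def get_user_name(user_id):
    global query_count
    query_count += 1
    row = conn.execute(
        "SELECT name FROM users WHERE id = ?", (user_id,)
    ).fetchone()
    return row[0] if row else None


get_user_name(1)
get_user_name(1)  # served from the cache, no second query
print(query_count)

# After a write, the cached value is stale until the cache is cleared.
conn.execute("UPDATE users SET name = 'Grace' WHERE id = 1")
conn.commit()
stale = get_user_name(1)
get_user_name.cache_clear()
fresh = get_user_name(1)
print(stale, fresh)
```

The second half shows the main risk of caching mutable data: the cache has to be invalidated (here with `cache_clear()`) whenever the underlying rows change.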

Summary

Caching is a vital optimization technique in Python. By storing and reusing the results of expensive computations, you can significantly improve the speed and resource utilization of your applications, whether you rely on built-in tools such as functools.lru_cache or on custom solutions.

Frequently Asked Questions

Q1: What is caching?

A1: Caching saves the results of computationally expensive operations, reusing them for identical inputs to boost performance.

Q2: When should I use caching?

A2: Use caching for functions with significant computational overhead, those repeatedly called with the same arguments, and those producing consistent, predictable outputs.

Q3: What are some practical uses of caching?

A3: Caching is beneficial for optimizing database queries, API calls, and other computationally intensive tasks, leading to faster response times and reduced resource consumption.

The above is the detailed content of What is Python Caching?. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undress AI Tool

Undress images for free

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

From Adoption To Advantage: 10 Trends Shaping Enterprise LLMs In 2025

Jun 20, 2025 am 11:13 AM

From Adoption To Advantage: 10 Trends Shaping Enterprise LLMs In 2025

Jun 20, 2025 am 11:13 AM

Here are ten compelling trends reshaping the enterprise AI landscape.Rising Financial Commitment to LLMsOrganizations are significantly increasing their investments in LLMs, with 72% expecting their spending to rise this year. Currently, nearly 40% a

AI Investor Stuck At A Standstill? 3 Strategic Paths To Buy, Build, Or Partner With AI Vendors

Jul 02, 2025 am 11:13 AM

AI Investor Stuck At A Standstill? 3 Strategic Paths To Buy, Build, Or Partner With AI Vendors

Jul 02, 2025 am 11:13 AM

Investing is booming, but capital alone isn’t enough. With valuations rising and distinctiveness fading, investors in AI-focused venture funds must make a key decision: Buy, build, or partner to gain an edge? Here’s how to evaluate each option—and pr

The Unstoppable Growth Of Generative AI (AI Outlook Part 1)

Jun 21, 2025 am 11:11 AM

The Unstoppable Growth Of Generative AI (AI Outlook Part 1)

Jun 21, 2025 am 11:11 AM

Disclosure: My company, Tirias Research, has consulted for IBM, Nvidia, and other companies mentioned in this article.Growth driversThe surge in generative AI adoption was more dramatic than even the most optimistic projections could predict. Then, a

These Startups Are Helping Businesses Show Up In AI Search Summaries

Jun 20, 2025 am 11:16 AM

These Startups Are Helping Businesses Show Up In AI Search Summaries

Jun 20, 2025 am 11:16 AM

Those days are numbered, thanks to AI. Search traffic for businesses like travel site Kayak and edtech company Chegg is declining, partly because 60% of searches on sites like Google aren’t resulting in users clicking any links, according to one stud

AGI And AI Superintelligence Are Going To Sharply Hit The Human Ceiling Assumption Barrier

Jul 04, 2025 am 11:10 AM

AGI And AI Superintelligence Are Going To Sharply Hit The Human Ceiling Assumption Barrier

Jul 04, 2025 am 11:10 AM

Let’s talk about it. This analysis of an innovative AI breakthrough is part of my ongoing Forbes column coverage on the latest in AI, including identifying and explaining various impactful AI complexities (see the link here). Heading Toward AGI And

Build Your First LLM Application: A Beginner's Tutorial

Jun 24, 2025 am 10:13 AM

Build Your First LLM Application: A Beginner's Tutorial

Jun 24, 2025 am 10:13 AM

Have you ever tried to build your own Large Language Model (LLM) application? Ever wondered how people are making their own LLM application to increase their productivity? LLM applications have proven to be useful in every aspect

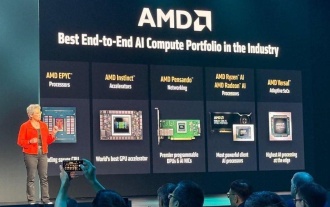

AMD Keeps Building Momentum In AI, With Plenty Of Work Still To Do

Jun 28, 2025 am 11:15 AM

AMD Keeps Building Momentum In AI, With Plenty Of Work Still To Do

Jun 28, 2025 am 11:15 AM

Overall, I think the event was important for showing how AMD is moving the ball down the field for customers and developers. Under Su, AMD’s M.O. is to have clear, ambitious plans and execute against them. Her “say/do” ratio is high. The company does

Future Forecasting A Massive Intelligence Explosion On The Path From AI To AGI

Jul 02, 2025 am 11:19 AM

Future Forecasting A Massive Intelligence Explosion On The Path From AI To AGI

Jul 02, 2025 am 11:19 AM

Let’s talk about it. This analysis of an innovative AI breakthrough is part of my ongoing Forbes column coverage on the latest in AI, including identifying and explaining various impactful AI complexities (see the link here). For those readers who h