An in-depth analysis of the MongoDB storage engine (with schematic diagram)

Dec 06, 2022, 05:00 PM

This article introduces the storage engines in MongoDB. I hope you find it helpful!

A brief review

Last time we talked about MongoDB clusters, which come in two forms: master-slave clusters and sharded clusters. For the shards in a sharded cluster, there are a few points to watch out for. Let's review them together:

- For hot data

Certain shard keys (a shard key is an indexed field, or compound of indexed fields, that exists in every document in the collection) cause all read or write requests to land on a single chunk or shard, which overloads that one shard server. An auto-incrementing shard key easily causes this kind of write hot spot.

- For indivisible data blocks

With a coarse-grained shard key, many documents may share the same shard key value.

In that case these documents cannot be split across multiple chunks, which limits MongoDB's ability to distribute data evenly.

- For query obstacles

If the shard key has no relation to the queries being run, query performance will be poor.
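The hot-data point above can be illustrated with a small simulation. This is only a sketch under stated assumptions: the shard count, the range boundaries, and the helper names `range_shard` and `hashed_shard` are hypothetical, and MD5 is used here merely as a stand-in hash, not as a description of MongoDB's internal hashing or balancing.

```python
import hashlib
from collections import Counter

NUM_SHARDS = 4  # hypothetical cluster size


def range_shard(key: int, boundaries: list[int]) -> int:
    """Ranged sharding: pick the first range whose upper bound exceeds the key."""
    for shard, upper in enumerate(boundaries):
        if key < upper:
            return shard
    return len(boundaries)  # everything past the last boundary goes to the last shard


def hashed_shard(key: int, num_shards: int = NUM_SHARDS) -> int:
    """Hashed sharding: route by a hash of the key instead of the key itself."""
    digest = hashlib.md5(str(key).encode()).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


# Assume the ranges were split back when the collection held ids 0..2999.
boundaries = [1000, 2000, 3000]
new_ids = range(3000, 4000)  # auto-incrementing ids of newly inserted documents

ranged = Counter(range_shard(i, boundaries) for i in new_ids)
hashed = Counter(hashed_shard(i) for i in new_ids)

print("ranged:", dict(ranged))  # every new write lands on the same shard
print("hashed:", dict(hashed))  # writes are spread across the shards
```

With the ranged layout, every new id is larger than the last boundary, so a single shard absorbs all the writes. Hashing the key spreads the same inserts out, at the cost of making range queries on that key less efficient.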

We need to keep the points above in mind. If we run into similar problems in real work, we will know where to start.

Today we will take a brief look at the storage engines of MongoDB.

Storage engine

When it comes to MongoDB storage engines, the first thing to know is that the concept of a pluggable storage engine was introduced in MongoDB 3.0.

Now there are mainly these engines:

- WiredTiger storage engine

- In-Memory storage engine

When pluggable storage engines first came out, the default was the MMAPv1 storage engine. WiredTiger has been the default since MongoDB 3.2.

As the name suggests, the MMAPv1 engine is built on mmap, that is, on Linux memory-mapped files.

The MMAPv1 engine is no longer used (it was deprecated in MongoDB 4.0 and removed in 4.2) because the WiredTiger storage engine is better. Compared with MMAPv1, WiredTiger has the following advantages:

- WiredTiger performs better on reads and writes

WiredTiger makes better use of the processing power of multi-core systems.

- WiredTiger has finer lock granularity

The MMAPv1 engine uses collection-level (table-level) locks, so throughput is limited when there are concurrent operations on a single collection.

WiredTiger uses document-level locks, which improves concurrency and throughput.

- WiredTiger compresses better

WiredTiger uses prefix compression for indexes, which reduces memory usage compared with MMAPv1.

WiredTiger also provides block compression algorithms, which can greatly reduce disk usage.
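To make the idea of prefix compression concrete, here is a minimal sketch of the general technique on a sorted list of keys. It is not WiredTiger's actual on-disk format; the function names and the sample keys are assumptions made up for illustration.

```python
import os


def prefix_compress(sorted_keys: list[str]) -> list[tuple[int, str]]:
    """Store each key as (length of prefix shared with the previous key, remaining suffix)."""
    encoded = []
    previous = ""
    for key in sorted_keys:
        shared = len(os.path.commonprefix([previous, key]))
        encoded.append((shared, key[shared:]))
        previous = key
    return encoded


def prefix_decompress(encoded: list[tuple[int, str]]) -> list[str]:
    """Rebuild the full keys by reusing the shared prefix of the previous key."""
    keys = []
    previous = ""
    for shared, suffix in encoded:
        key = previous[:shared] + suffix
        keys.append(key)
        previous = key
    return keys


keys = sorted(["user:1001", "user:1002", "user:1010", "user:2001"])
encoded = prefix_compress(keys)
print(encoded)
# [(0, 'user:1001'), (8, '2'), (7, '10'), (5, '2001')]
print(prefix_decompress(encoded) == keys)
# True
```

Because neighbouring keys in a sorted index tend to share long prefixes, only the short suffixes need to be stored, which is where the space saving comes from.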

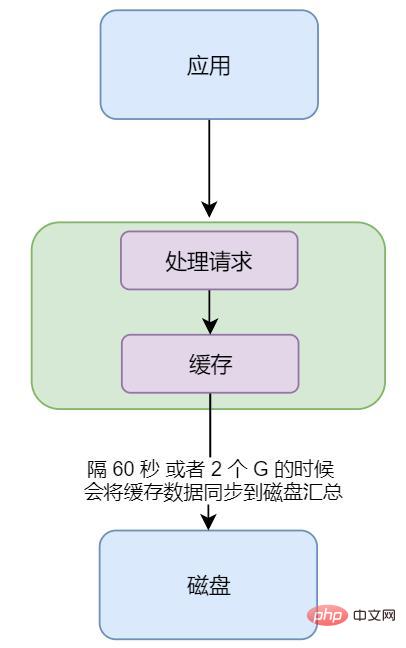

How the WiredTiger engine writes data

As the figure above shows, the way WiredTiger writes to disk is quite simple:

- An application request reaches MongoDB; MongoDB handles it and stores the result in the cache

- When 2 GB of data has accumulated (the MongoDB documentation states this threshold in terms of journal data), or when the 60 s timer expires, a checkpoint flushes the data in the cache to disk.
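The two flush triggers described above can be sketched as a toy cache. This is a simplified model, not WiredTiger's implementation: the class name `CheckpointingCache`, its fields, and the explicit `now` argument (used instead of a real clock so the example stays deterministic, with time assumed to start at 0) are all hypothetical.

```python
class CheckpointingCache:
    """Toy write cache that flushes on a size threshold or a time interval."""

    def __init__(self, max_bytes: int = 2 * 1024**3, interval_s: float = 60.0):
        self.max_bytes = max_bytes    # size trigger (2 GB in the article)
        self.interval_s = interval_s  # time trigger (60 s in the article)
        self.dirty = {}               # changes held only in memory
        self.dirty_bytes = 0          # bytes written since the last flush
        self.disk = {}                # stands in for the data files
        self.last_flush = 0.0         # time of the last flush, starting at 0

    def write(self, key: str, value: bytes, now: float) -> None:
        """Apply a write to the cache, then flush if a trigger has fired."""
        self.dirty[key] = value
        self.dirty_bytes += len(value)
        self.maybe_flush(now)

    def maybe_flush(self, now: float) -> bool:
        """Flush when either the size or the time threshold is reached."""
        size_hit = self.dirty_bytes >= self.max_bytes
        time_hit = now - self.last_flush >= self.interval_s
        if size_hit or time_hit:
            self.disk.update(self.dirty)
            self.dirty.clear()
            self.dirty_bytes = 0
            self.last_flush = now
            return True
        return False


# Tiny thresholds so the behaviour is easy to follow.
cache = CheckpointingCache(max_bytes=10, interval_s=60.0)
cache.write("a", b"12345", now=1.0)   # 5 bytes, 1 s elapsed: stays in memory
cache.write("b", b"123456", now=2.0)  # 11 bytes >= 10: size trigger flushes
cache.write("c", b"x", now=30.0)      # 1 byte, 28 s since flush: stays in memory
print(sorted(cache.disk), sorted(cache.dirty))
# ['a', 'b'] ['c']
```

Note that `"c"` exists only in memory at the end of the example, which is exactly the window the next paragraph worries about.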

Careful readers will ask: what if we are at second 59, a little over 1 GB has been written, the data in the cache has not yet been synced to disk, and MongoDB crashes? Won't MongoDB lose data?

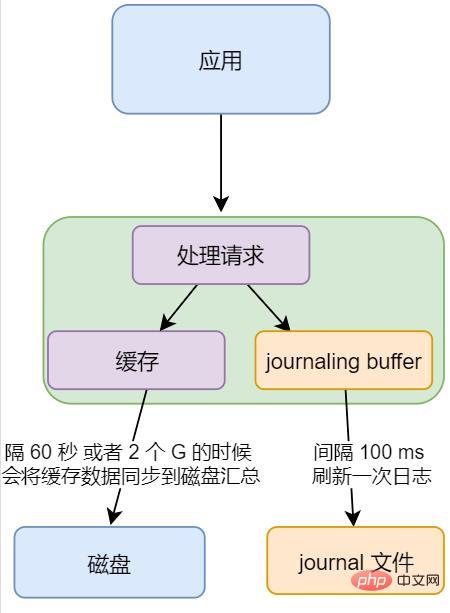

It goes without saying that the designers of MongoDB would not allow this situation to exist, so there must be a solution, as follows.

As shown in the picture above, there is now an extra journaling buffer and journal file:

- journaling buffer

A buffer that stores MongoDB's insert, delete, and update operations

- journal file

Similar to the transaction log in a relational database

The purpose of introducing journaling is:

Journaling lets a MongoDB database recover quickly after an unexpected failure

Journaling log function

Journaling looks a bit like AOF persistence in Redis, although the two are only similar, not identical.

In MongoDB 2.4, journaling is already enabled by default (on 64-bit builds it has been since 2.0). When we start a mongod instance, the service checks whether data needs to be recovered.

So the data loss described above does not happen.
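The recovery idea can be sketched as a tiny write-ahead journal. This is a conceptual model only: the class `JournaledStore` and its methods are hypothetical, and the in-memory `journal` list simply stands in for a journal file that is assumed to survive the crash (in a real server the journaling buffer is itself synced to the journal file at short intervals).

```python
class JournaledStore:
    """Toy store: writes go to a journal first, then to an in-memory cache."""

    def __init__(self):
        self.journal = []  # stands in for the durable journal file
        self.cache = {}    # in-memory state, lost on a crash
        self.disk = {}     # data files as of the last checkpoint

    @staticmethod
    def _apply(state: dict, op: tuple) -> None:
        kind, key, value = op
        if kind == "set":
            state[key] = value
        elif kind == "delete":
            state.pop(key, None)

    def write(self, kind: str, key: str, value=None) -> None:
        op = (kind, key, value)
        self.journal.append(op)      # record the operation first
        self._apply(self.cache, op)  # then change the in-memory data

    def checkpoint(self) -> None:
        """Flush the cache to disk; older journal entries are no longer needed."""
        self.disk = dict(self.cache)
        self.journal.clear()

    def crash(self) -> None:
        """Simulate an abnormal exit: everything held only in memory is gone."""
        self.cache = {}

    def recover(self) -> None:
        """Start from the last checkpoint and replay the journal on top of it."""
        self.cache = dict(self.disk)
        for op in self.journal:
            self._apply(self.cache, op)


store = JournaledStore()
store.write("set", "a", 1)
store.checkpoint()          # "a" is now in the data files
store.write("set", "b", 2)  # only in the cache and the journal
store.write("delete", "a")  # likewise
store.crash()
store.recover()
print(store.cache)
# {'b': 2}
```

The writes made after the checkpoint were never flushed to the data files, yet they reappear after `recover()` because they were journaled before the crash.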

In addition, we need to know that journaling writes a log entry whenever MongoDB performs a write operation, that is, an insert, delete, or update, and this has some impact on performance.

Read operations, however, are not recorded in the journal, so journaling has no impact on reads.

That's it for today. This is what I have learned so far; if anything is off, please correct me.
