UNIX and Linux: Two Titans of the Operating System World

UNIX and Linux are two giants of the operating system field that have profoundly shaped the digital world for decades. Although the two look similar at first glance, a closer analysis reveals fundamental differences that matter to developers, administrators, and users. This article explores the nuances between UNIX and Linux in depth, covering their historical origins, licensing models, system architecture, communities, user interfaces, market applications, and security models.

Historical background

UNIX, a pioneer among operating systems, was born at AT&T Bell Labs in the late 1960s. Developed by a team led by Ken Thompson and Dennis Ritchie, it was originally a multitasking, multi-user platform for research. In the decades that followed, commercialization produced a range of proprietary UNIX versions, such as Sun's Solaris, IBM's AIX, and HP's HP-UX, each targeting specific hardware platforms and industries.

In the early 1990s, Finnish computer science student Linus Torvalds helped ignite the open-source revolution by developing the Linux kernel. Unlike UNIX, which is largely controlled by vendors, Linux relies on collaborative development. Its open-source nature attracts contributions from programmers around the world, driving rapid innovation and spawning a wide variety of distributions, each with its own features and uses.

Licensing and distribution

One of the most significant differences between UNIX and Linux is their licensing model. Commercial UNIX is proprietary software: using and customizing it usually requires a license from the vendor, which limits how far users can modify and redistribute the system.

Linux, by contrast, is distributed under an open-source license, most notably the GNU General Public License (GPL). This license lets users study, modify, and redistribute the source code freely, provided that distributed modifications are released under the same license. The result is a large number of Linux distributions that meet different needs, such as the user-friendly Ubuntu, the stable CentOS, and the community-driven Debian.
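Because each distribution ships its own userland on top of the Linux kernel, it is often useful to check which one a machine is actually running. The minimal Python sketch below assumes the system provides /etc/os-release, the KEY=value file that most modern distributions (including Ubuntu and Debian) use to identify themselves. The helper names parse_os_release and describe_distribution are hypothetical, and the parser handles only the common quoting cases rather than every detail of the format.

```python
import shlex
from pathlib import Path


def parse_os_release(text: str) -> dict[str, str]:
    """Parse os-release style KEY=value lines into a dict."""
    info: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blank lines, comments and anything that is not KEY=value.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        try:
            # shlex strips the optional single or double quotes around values.
            parts = shlex.split(value)
        except ValueError:
            # Unbalanced quotes: fall back to trimming quote characters.
            parts = [value.strip("\"'")]
        info[key] = parts[0] if parts else ""
    return info


def describe_distribution(path: str = "/etc/os-release") -> str:
    """Return a human-readable distribution name, or "unknown"."""
    try:
        text = Path(path).read_text(encoding="utf-8")
    except OSError:
        # Missing file: not Linux, or a distribution without os-release.
        return "unknown"
    info = parse_os_release(text)
    return info.get("PRETTY_NAME") or info.get("NAME") or "unknown"


if __name__ == "__main__":
    print(describe_distribution())
```

Run on a Linux machine, this prints the distribution's PRETTY_NAME value; the exact string depends on the distribution and release installed.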

Kernel and system architecture

The architecture of the kernel, the core of the operating system, plays a crucial role in defining its behavior and functionality. UNIX systems typically use a monolithic kernel, which means that core functions such as memory management, process scheduling, and hardware drivers are tightly integrated and run together in kernel space.

Linux also uses a monolithic kernel, but it adds modularity through loadable kernel modules, which allow functionality such as device drivers or file systems to be added to or removed from a running kernel without rebooting. In addition, Linux's collaborative development model gives it broad hardware support and helps it adapt to a changing technology landscape.
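To make loadable modules concrete, here is a minimal Python sketch that lists the modules currently loaded into a running Linux kernel. It reads /proc/modules, which exposes the same information the lsmod command displays, and assumes its usual line layout of name, size, reference count, dependents, state, and load address. KernelModule, parse_proc_modules, and loaded_modules are hypothetical names chosen for illustration. Actually loading or unloading a module is done separately, for example with modprobe or rmmod as root; this sketch only observes the result.

```python
from dataclasses import dataclass
from pathlib import Path
from typing import List, Optional


@dataclass
class KernelModule:
    name: str
    size: int                # memory footprint in bytes
    refcount: Optional[int]  # None when the kernel reports "-"
    used_by: List[str]       # modules (or "[permanent]") depending on this one
    state: str               # "Live", "Loading" or "Unloading"


def parse_proc_modules(text: str) -> List[KernelModule]:
    """Parse the text of /proc/modules into KernelModule records."""
    modules: List[KernelModule] = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 5:
            continue  # skip blank or truncated lines
        # Only the first five fields are used; the load address and any
        # taint flags that follow are ignored.
        name, size, refcount, deps, state = fields[:5]
        # Dependents are printed as "a,b," or "-" when there are none.
        used_by = [] if deps == "-" else [d for d in deps.split(",") if d]
        modules.append(
            KernelModule(
                name=name,
                size=int(size),
                refcount=None if refcount == "-" else int(refcount),
                used_by=used_by,
                state=state,
            )
        )
    return modules


def loaded_modules(path: str = "/proc/modules") -> List[KernelModule]:
    """Return currently loaded modules, or [] if the file is unavailable."""
    try:
        return parse_proc_modules(Path(path).read_text())
    except OSError:
        return []  # not Linux, or /proc is not mounted


if __name__ == "__main__":
    # Show the five largest modules currently loaded.
    for mod in sorted(loaded_modules(), key=lambda m: m.size, reverse=True)[:5]:
        users = ", ".join(mod.used_by) or "-"
        print(f"{mod.name:<24} {mod.size:>10}  used by: {users}")
```

Running this before and after loading a module with modprobe would show the new entry appear without a reboot, which is the practical benefit of the modular design described above.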

Community and development

A vibrant community is often the hallmark of a successful operating system. Because UNIX is proprietary, community engagement around it has historically been limited. Development and updates are controlled mainly by individual vendors, which has slowed the adoption of new technologies.

By contrast, the Linux community thrives on open collaboration. Developers, enthusiasts, and organizations contribute their expertise to improve the system's performance, security, and usability. This collaborative model keeps Linux evolving rapidly, with new features and updates arriving at a fast pace.

Customization and flexibility

The degree of customization an operating system offers can significantly affect how well it fits different environments. Because UNIX implementations are proprietary, user customization options are often limited. However, vendors sometimes tailor UNIX to specific industries and hardware, as IBM does with AIX on its own Power servers for demanding enterprise and high-performance computing workloads.

Linux's open-source nature, on the other hand, lets users customize the system extensively. This versatility is a major advantage across a wide range of applications, from servers in data centers to embedded systems in IoT devices, and it makes Linux a first choice for technical users looking for tailored solutions.

User interface

The user interface (UI) is how users interact with the operating system. UNIX systems traditionally rely on the command-line interface (CLI) as their main mode of interaction. Although powerful, the CLI can present a steep learning curve for beginners.

Linux, especially on servers, is likewise operated primarily through the CLI. However, recognizing the importance of graphical user interfaces (GUIs), the Linux ecosystem offers a variety of desktop environments such as GNOME, KDE, and Xfce. These interfaces make the system usable for a wider audience, serving both command-line enthusiasts and those who prefer a more intuitive experience.

Market share and industry applications

The extent to which UNIX and Linux have penetrated various industries has changed over time. UNIX was once the dominant force, but its market share declined because of its proprietary limitations. Nevertheless, UNIX remains a pillar of industries such as finance and telecommunications, where legacy systems are still in service.

Driven by its open-source model, Linux has expanded into many fields. It is widely used as the foundation of web servers, powering much of the Internet's backbone. In addition, its cost-effectiveness and versatility make it the preferred choice for cloud computing environments.

Security and stability

Security and stability are crucial for any operating system. UNIX is often considered more secure because of its controlled environment and vendor accountability, and it has a proven record of reliability in critical systems.

With its open-source development model, Linux benefits from continuous scrutiny of its code by a global community. Vulnerabilities are typically fixed quickly, and security updates are distributed promptly. This collaborative development gives Linux a strong security posture, comparable even to proprietary systems such as UNIX.

Conclusion

Exploring UNIX and Linux reveals how significantly these two operating systems differ. Thanks to its proprietary legacy, UNIX continues to serve industries that demand stable, proven performance. Linux's open-source foundation, in contrast, has ushered in a new era of collaboration, flexibility, and innovation. The key to choosing between UNIX and Linux is understanding their respective strengths and weaknesses and how they align with your technical needs. As both systems continue to evolve, now is a good time to deepen your understanding of the operating system landscape.

The continued development of UNIX and Linux demonstrates the lasting power of innovation and collaboration. UNIX laid the foundation for modern operating systems, while Linux harnessed open-source principles to democratize software development. As we navigate an increasingly complex digital environment, UNIX and Linux remind us of technology's transformative power and the potential it still holds.

The above is the detailed content of "UNIX vs Linux: What's the Difference?". For more information, please follow other related articles on the PHP Chinese website.

Hot AI Tools

Undress AI Tool

Undress images for free

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1794

1794

16

16

1739

1739

56

56

1590

1590

29

29

1468

1468

72

72

267

267

587

587

Install LXC (Linux Containers) in RHEL, Rocky & AlmaLinux

Jul 05, 2025 am 09:25 AM

Install LXC (Linux Containers) in RHEL, Rocky & AlmaLinux

Jul 05, 2025 am 09:25 AM

LXD is described as the next-generation container and virtual machine manager that offers an immersive for Linux systems running inside containers or as virtual machines. It provides images for an inordinate number of Linux distributions with support

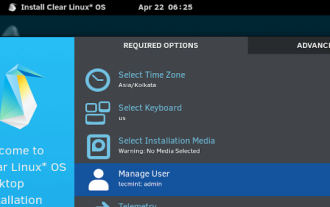

Clear Linux Distro - Optimized for Performance and Security

Jul 02, 2025 am 09:49 AM

Clear Linux Distro - Optimized for Performance and Security

Jul 02, 2025 am 09:49 AM

Clear Linux OS is the ideal operating system for people – ahem system admins – who want to have a minimal, secure, and reliable Linux distribution. It is optimized for the Intel architecture, which means that running Clear Linux OS on AMD sys

How to Hide Files and Directories in Linux

Jun 26, 2025 am 09:13 AM

How to Hide Files and Directories in Linux

Jun 26, 2025 am 09:13 AM

Do you sometimes share your Linux desktop with family, friends, or coworkers? If so, you may want to hide some personal files and folders. The challenge is figuring out how to conceal these files on a Linux system.In this guide, we will walk through

How to create a self-signed SSL certificate using OpenSSL?

Jul 03, 2025 am 12:30 AM

How to create a self-signed SSL certificate using OpenSSL?

Jul 03, 2025 am 12:30 AM

The key steps for creating a self-signed SSL certificate are as follows: 1. Generate the private key, use the command opensslgenrsa-outselfsigned.key2048 to generate a 2048-bit RSA private key file, optional parameter -aes256 to achieve password protection; 2. Create a certificate request (CSR), run opensslreq-new-keyselfsigned.key-outselfsigned.csr and fill in the relevant information, especially the "CommonName" field; 3. Generate the certificate by self-signed, and use opensslx509-req-days365-inselfsigned.csr-signk

7 Ways to Speed Up Firefox Browser in Linux Desktop

Jul 04, 2025 am 09:18 AM

7 Ways to Speed Up Firefox Browser in Linux Desktop

Jul 04, 2025 am 09:18 AM

Firefox browser is the default browser for most modern Linux distributions such as Ubuntu, Mint, and Fedora. Initially, its performance might be impressive, however, with the passage of time, you might notice that your browser is not as fast and resp

How to extract a .tar.gz or .zip file?

Jul 02, 2025 am 12:52 AM

How to extract a .tar.gz or .zip file?

Jul 02, 2025 am 12:52 AM

Decompress the .zip file on Windows, you can right-click to select "Extract All", while the .tar.gz file needs to use tools such as 7-Zip or WinRAR; on macOS and Linux, the .zip file can be double-clicked or unzip commanded, and the .tar.gz file can be decompressed by tar command or double-clicked directly. The specific steps are: 1. Windows processing.zip file: right-click → "Extract All"; 2. Windows processing.tar.gz file: Install third-party tools → right-click to decompress; 3. macOS/Linux processing.zip file: double-click or run unzipfilename.zip; 4. macOS/Linux processing.tar

How to Burn CD/DVD in Linux Using Brasero

Jul 05, 2025 am 09:26 AM

How to Burn CD/DVD in Linux Using Brasero

Jul 05, 2025 am 09:26 AM

Frankly speaking, I cannot recall the last time I used a PC with a CD/DVD drive. This is thanks to the ever-evolving tech industry which has seen optical disks replaced by USB drives and other smaller and compact storage media that offer more storage

How would you debug a server that is slow or has high memory usage?

Jul 06, 2025 am 12:02 AM

How would you debug a server that is slow or has high memory usage?

Jul 06, 2025 am 12:02 AM

If you find that the server is running slowly or the memory usage is too high, you should check the cause before operating. First, you need to check the system resource usage, use top, htop, free-h, iostat, ss-antp and other commands to check CPU, memory, disk I/O and network connections; secondly, analyze specific process problems, and track the behavior of high-occupancy processes through tools such as ps, jstack, strace; then check logs and monitoring data, view OOM records, exception requests, slow queries and other clues; finally, targeted processing is carried out based on common reasons such as memory leaks, connection pool exhaustion, cache failure storms, and timing task conflicts, optimize code logic, set up a timeout retry mechanism, add current limit fuses, and regularly pressure measurement and evaluation resources.